This is a guest post by our friends over at Nicereply. Jakub Slámka will teach you why it’s important to ace your next customer feedback survey and which mistakes to avoid. Read on for a great post!

Surveys come in all shapes and sizes. Done correctly, surveys can improve all departments in your business:

- Your product team can use them to find product-market fit and get customer feedback.

- Your marketing team can use them to improve messaging and support a better sales process.

- Your customer service team can use them to make customers happier and more satisfied.

But because all these teams rely on surveys, it’s vital to be surveying customers correctly.

Are your customer feedback surveys the best they can be?

When you’re just starting out, getting feedback is vital, so it’s natural for a business to set up a quick and easy survey.

But fast forward a few months and your quick and easy survey – that was supposed to be temporary – has now become a permanent part of your process. And that can leave you with a few questions:

- Is this feedback still relevant to what we’re trying to improve?

- Is this feedback helping improve our business?

- Is this feedback even useful any more?

Take a look at the top 4 mistakes you should 100% avoid when running customer feedback surveys.

1. Surveying too often

We know it can be tempting to want to survey customers about everything. We get it! You want to know what your customers are thinking.

But asking too frequently can lead to survey fatigue. This is where response rates go down and answer quality decreases because respondents are tired of being surveyed.

Instead of constantly asking your customers what they are thinking, survey smartly. That means only asking when necessary, and spreading surveys out through the year. Here’s how to do that:

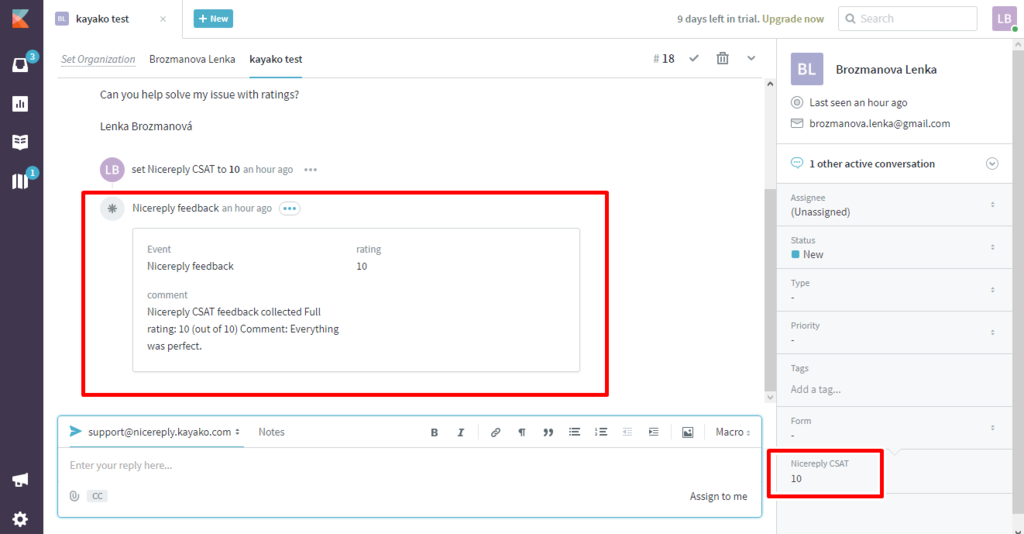

Use triggers to stop bombarding customers with surveys

Use your customer service software to set up triggers to prevent sending a survey to the same recipient multiple times a week. If a customer has already replied to a customer satisfaction survey, don’t send out another one the same day. Not only is it confusing, it can be a bit annoying.
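As a rough illustration of the trigger described above, here is a minimal Python sketch of a throttling rule. The function name `should_send_survey`, the seven-day gap, and the sample data are all assumptions made for the example. Your customer service software will have its own way of configuring this.

```python
from datetime import datetime, timedelta

# Hypothetical rule: at most one survey per recipient in any 7-day window.
MIN_GAP = timedelta(days=7)


def should_send_survey(last_sent, now, min_gap=MIN_GAP):
    """Return True if enough time has passed since the last survey.

    last_sent is None when the customer has never been surveyed.
    """
    if last_sent is None:
        return True
    return now - last_sent >= min_gap


# Made-up data: when each customer last received a survey.
last_survey_sent = {
    "jenny@example.com": datetime(2024, 2, 5, 9, 0),
}

now = datetime(2024, 2, 6, 9, 0)
for email in ["jenny@example.com", "new.customer@example.com"]:
    if should_send_survey(last_survey_sent.get(email), now):
        print(f"send survey to {email}")
    else:
        print(f"skip {email}: surveyed too recently")
```

Keeping the gap as a parameter makes it easy to use a stricter rule for long surveys and a looser one for one-click ratings.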

Create a customer communication calendar

Create a company-wide calendar to prevent survey collision between departments. That way marketing, product, and support all know when other departments are going to be sending surveys and can plan appropriately.

Only ask for information you need

Keep surveys brief and don’t ask questions that aren’t necessary or that you already know the answer to. For example, you already know how long they’ve been a customer, because that information is tucked away in your CRM. Instead of asking a customer to provide it, simply combine their survey response with the data you already have.
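To make the idea of combining data concrete, here is a small Python sketch that attaches customer tenure from a CRM record to a survey response, so the survey never has to ask for it. The record layout, the field names (`customer_id`, `customer_since`, `tenure_days`) and the `enrich_response` helper are hypothetical. A real CRM export will look different.

```python
from datetime import date

# Made-up CRM records keyed by customer ID; field names are hypothetical.
crm = {
    "c-101": {"name": "Jenny", "customer_since": date(2023, 2, 1)},
}


def enrich_response(response, crm_records, today):
    """Attach tenure from the CRM to a survey response instead of asking for it."""
    enriched = dict(response)  # copy so the original response is untouched
    record = crm_records.get(response["customer_id"])
    if record is not None:
        enriched["tenure_days"] = (today - record["customer_since"]).days
    return enriched


response = {"customer_id": "c-101", "score": 9}
enriched = enrich_response(response, crm, today=date(2024, 2, 1))
print(enriched)
```

Responses from customers missing in the CRM pass through unchanged, so the survey analysis does not break on incomplete data.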

Be transparent and tell them why you want the information

Emphasize the value of each survey to the respondents. If customers understand why they are being surveyed, they may feel more strongly about putting their time into a response. Stress that every response will be read, and explain what actions your team takes based on the results. You can even include a past change that was made due to survey responses – that’s what we do!

Hi Jenny!

It’s that time of year again! We send out a customer survey each February to understand how you use our product, and what we can do better this year. Our CEO reads every response, and it takes less than 10 minutes to complete.

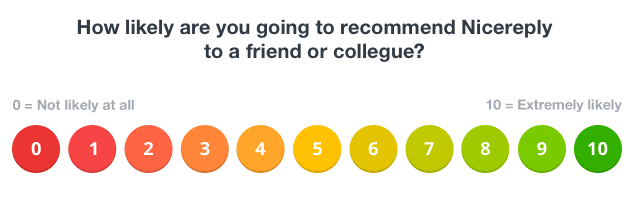

Start the survey by telling us… How likely are you to recommend Nicereply to a friend or colleague?

Last year, you told us that you needed more ways to control when surveys were sent. Over the last 12 months we’ve rolled out enhanced integrations with the most popular Help Desks. It was your feedback that made this happen!

Thanks for being a Nicereply customer,

Jakub Slámka

Chief Marketing Officer

2. Surveying without a plan or purpose

Surveys should be designed to answer a question. If you don’t know why you’re asking, or what you’re going to do with the information, don’t bother your customers with a survey.

For example, if you’re using a customer satisfaction survey but don’t have a plan to follow up after the survey, there’s no point. You might as well not take up your customers’ time.

When planning a survey, don’t just pick a question and hit send. Survey design should include the audience, the questions to be asked, integrated data and the follow-up plan. Consider:

- What happens with the responses?

- Who is responsible for analyzing them?

- Who follows up with detractors, or replies to unsatisfied customers?

The effort involved in a survey is like an iceberg. Roughly 20% of your time will go into setting up the survey and sending it off, while 80% of the effort is in the follow-up, the analysis and acting on your new insights. The real work begins when the responses come back.

3. Using one customer feedback survey question

Should you be surveying and measuring customer satisfaction, Net Promoter Score, or customer effort?

Customer service leaders love to debate which customer feedback survey is the most effective for measuring customer happiness.

But it’s not about using one or the other. It’s about using them effectively, together.

NPS, CSAT and CES all tell you different things about the customer experience:

NPS measures customer loyalty over time, but it doesn’t tell you a lot about the why behind the score.

CSAT and CES are great transactional metrics, meaning they tell you a lot about a specific interaction. You can see which conversations went poorly, but they don’t tell you much about the customer’s overall perception of your company.

But put these metrics together, and you have a 360-degree view of the customer experience. You can analyze:

- What’s causing friction

- Where customer service affects loyalty

- Where in the customer journey satisfaction declines

This is why it’s important to branch out and measure more than one metric.

Adam wrote a great post about the process of layering NPS and CSAT or CES together to get a full picture of the customer’s journey. Check it out and get layering!

Using one metric means you’re only seeing one side of the story.

4. Ignoring response rate warning signs

When is a 90% customer satisfaction score better than a 95% customer satisfaction score? When the response rate is higher!

The response rate of a survey is the percentage of customers who have received the survey and replied to it. If 10 customers are surveyed, and 8 reply, the response rate would be 80%.

A high response rate generally indicates satisfied and engaged customers.

If you have trouble recruiting responses for your surveys, it should trigger a customer retention warning. Most companies should expect at least a 20% response rate (meaning 1 in 5 customers surveyed respond).
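The response rate arithmetic above is simple enough to sketch in a few lines of Python. The function names and the `WARNING_THRESHOLD` constant are made up for illustration. The 20% value is the "1 in 5" benchmark mentioned in the text, not a universal rule.

```python
# Percent; the "1 in 5" benchmark from the text, used here as an assumed cutoff.
WARNING_THRESHOLD = 20.0


def response_rate(surveys_sent, responses_received):
    """Percentage of surveyed customers who replied."""
    if surveys_sent <= 0:
        raise ValueError("surveys_sent must be positive")
    return 100 * responses_received / surveys_sent


def needs_attention(surveys_sent, responses_received, threshold=WARNING_THRESHOLD):
    """True when the response rate falls below the warning threshold."""
    return response_rate(surveys_sent, responses_received) < threshold


print(response_rate(10, 8))      # 80.0, the example from the text
print(needs_attention(100, 15))  # True: 15% is below the 20% benchmark
```

Running this check on every survey batch gives you an early signal before the trend shows up in your satisfaction scores.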

If you see your response rate stalling, there are a couple of things you can do to get it back on track:

- Try improving the survey format. For example, use one-click surveys where the response is collected instantly, rather than requiring the customer to press submit. In our testing, instant ratings collected 236% more ratings than surveys where customers needed to submit their response.

- Work constantly to build customer trust before surveying. This might be as simple as using their name when chatting to them. It might be sending them personalized resources to help them build their business. Maybe you follow them on social media and interact with their posts – even when they’re not directed at you. However you build it, customer trust will help you get those response rates up. Customers will see the humans behind your brand and know that any feedback they give will help create a better product for them.

Track your survey response rates over time and celebrate increases just like increases in NPS or CSAT. It means that your customers are more engaged and willing to give feedback, which is a beautiful thing!

When’s your next customer feedback survey?

If you’re thinking about customer feedback surveys, we can already tell you’re customer-focused. You want to know what your customers need, and are taking the right steps to find out. Keep an eye on the four biggest faux pas above, and you’ll easily go from customer-focused to customer-obsessed.

About the author:

Jakub Slámka is CMO at Nicereply, a customer experience management platform that measures CSAT, CES and NPS to delight and retain customers. Jakub loves books, rock music and everything geeky.
