One of the biggest mistakes when measuring customer experience is focusing on what your team is doing instead of what your customers are experiencing.

Metrics like time tracking, productivity, and performance take the front seat, but often don’t relate to the experience your customer receives or how they feel about it.

Average reply time, first response time, and the overall volume of conversations can give you useful insights into the productivity of your support, but they each have flaws.

Productivity is not the only metric that matters

Average reply time is a useful management tool to help managers keep tabs on how many hours are being worked, make staffing decisions, and plan shifts.

But it fails to differentiate simple replies from complex, high-involvement responses. This penalizes agents who are efficient but sometimes need longer conversations to help customers through complex problems.

First response time helps you understand how quickly your support team gets back to customers, but customer demands and expectations vary across channels.

- Email customers demand a reply in under 24 hours

- Social support demands a reply within the first hour

- Chat support needs a response within 5 minutes at most

First response time is often closely tied to customer satisfaction ratings.

Your first response time might say you’re replying to customers in under 24 hours, but your overall customer satisfaction rating might be at an all-time low because you’re leaving customers hanging on live chat and social media.
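To see how a healthy-looking overall average can hide slow channels, here is a minimal Python sketch. The target times come from the channel expectations listed above; the sample data and the names `TARGET_MINUTES` and `frt_by_channel` are assumptions made up for illustration, not part of any particular helpdesk tool.

```python
from statistics import mean

# Target first response times in minutes, based on the channel expectations above.
TARGET_MINUTES = {"email": 24 * 60, "social": 60, "chat": 5}

# Hypothetical sample data: (channel, minutes until the first response).
first_responses = [
    ("email", 300), ("email", 420), ("email", 600),
    ("social", 180), ("social", 240),
    ("chat", 12), ("chat", 20),
]


def frt_by_channel(rows):
    """Group first response times by channel and average each group."""
    grouped = {}
    for channel, minutes in rows:
        grouped.setdefault(channel, []).append(minutes)
    return {channel: mean(values) for channel, values in grouped.items()}


# The blended average across every channel.
overall = mean(minutes for _, minutes in first_responses)

# The same data split per channel and compared with each channel's target.
per_channel = frt_by_channel(first_responses)
missed = [c for c, avg in per_channel.items() if avg > TARGET_MINUTES[c]]

print(f"Overall average: {overall / 60:.1f} hours")
print(f"Channels missing their target: {missed}")
```

With this sample data the blended average sits well under 24 hours, yet social and chat both miss their targets. That is the gap a single first response time figure cannot show.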

An insights panel or dashboard won’t be able to capture these detailed metrics. They don’t give us true insights into what results in customer loyalty, the true goal of all businesses.

So which important metrics should you be monitoring in your insights panel or dashboard?

Aim for the right loyalty goals

For years, the perception has been that exceeding expectations equals customer loyalty. In fact, CEB found this to be incorrect.

Customers want to put in minimal effort to get an answer, and that’s what makes them come back to your business. Of the customers who go through a high-effort experience, 96% become disloyal to the business.

Measuring customer experience goes beyond simply meeting customers’ expectations with a speedy reply. It’s about a reply that’s full of context, is personal, and solves other potential issues before they happen.

What are customer experience metrics?

Customer experience metrics keep tabs on what your customers experience when they receive help from your support team. They show whether the experiences you’re creating are friction-free or riddled with difficulties.

Customer experience should be treated as a KPI in understanding what makes a support experience great. Great support is more than just the results of your Net Promoter Score, customer effort score, or customer satisfaction surveys (though it is worth running these surveys to keep tabs on overall loyalty). Often these surveys are used in isolation, so they only scratch the surface.

To dig deeper and get a fuller picture of the customer experience, you need to track a combination of metrics:

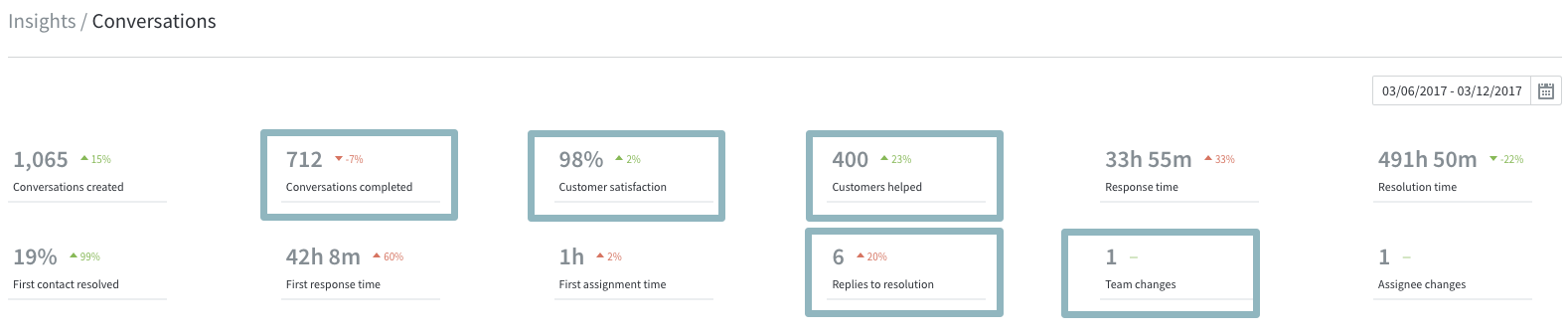

- Conversations completed

- Customers helped

- Replies to resolution

- Team changes

- Customer satisfaction

Conversations completed

To frame your team’s workload, you need one big-picture metric. Instead of the more common total conversations received, we recommend choosing the number of conversations completed.

Why conversations completed over total number of conversations? Well, check your support inbox. How many of the conversations there merit a response? I bet not all of them. Your support channels are likely to receive everything from spam, wrong-company messages, and internal company-wide emails to Twitter broadcasts and poorly written guest post requests.

But there is value in comparing how big the difference is between conversations completed and total number of conversations.

If you are getting 10,000 support conversations but only completing 1,000, your team is dealing with one of the following:

- A massive amount of spam (or other issues) – if you work on clearing that up, it will save all your staff a huge amount of time.

- A volume of inbound conversations that vastly overwhelms your support staff – and you need to see what you can do about that. Producing better self-service content to deflect some of the conversations is one solution.

- A legitimate volume of support conversations that your team aren’t able to get through by themselves – it might be that you simply need more staff.
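The comparison above boils down to a simple ratio. Here is a rough Python sketch using the 10,000 and 1,000 figures from the example. The outcome labels (`spam`, `unanswered`) and their split are hypothetical, and your own tool may label conversations differently.

```python
from collections import Counter

# Hypothetical outcome labels for every conversation received in a period.
# Only the 10,000 total and 1,000 completed come from the example above.
outcomes = ["completed"] * 1_000 + ["spam"] * 6_500 + ["unanswered"] * 2_500

counts = Counter(outcomes)
received = sum(counts.values())

# Share of received conversations that were actually completed.
completion_ratio = counts["completed"] / received

# Share of each outcome, to show where the gap comes from.
shares = {outcome: n / received for outcome, n in counts.items()}

print(f"{completion_ratio:.0%} of conversations completed")
for outcome, share in shares.items():
    print(f"{outcome}: {share:.0%}")
```

If most of the gap is spam, filtering is the fix. If most of it is unanswered, the fix is more likely self-service content or staffing.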

From here, you can dig into details of what goes into an effortless experience.

Number of customers helped

The number of unique requesters you complete conversations for is the number of customers helped. It essentially adds up all the TO, CC, Twitter, Facebook, and live chat users to show how many customers have been helped in the given time period.

Ideally, the number of customers helped should be trending downwards. This means the improvements you’re making to your product or service – improving self-service content, creating explainer videos, or ensuring better answers to customers – are working. If it is trending upwards, more customers are getting stuck and need your help.

You then need to dig deeper. Say you spot that the number of customers helped is increasing by 10% every month. You need to find out what’s behind that.

- Maybe you released a product change that is tripping up customers?

- Maybe you’ve gained 10% more customers? Although that’s great, why did they all get stuck? You might need to fix your onboarding to make it easier.

Use the number of customers helped as your red flag indicator of satisfaction and loyalty.
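As a rough illustration, customers helped can be counted as the unique requesters per period and then compared month over month. The sketch below is a minimal Python version. The data is invented, and `customers_helped` and `month_over_month_change` are hypothetical helper names.

```python
# Hypothetical completed conversations: (month, requester identifier).
january = [("2024-01", f"user{i}@example.com") for i in range(10)]
january.append(("2024-01", "user0@example.com"))  # repeat contact, counted once
february = [("2024-02", f"user{i}@example.com") for i in range(11)]
completed = january + february


def customers_helped(rows):
    """Count unique requesters per month."""
    by_month = {}
    for month, requester in rows:
        by_month.setdefault(month, set()).add(requester)
    return {month: len(people) for month, people in sorted(by_month.items())}


def month_over_month_change(counts):
    """Relative change in customers helped compared with the previous month."""
    months = list(counts)
    return {
        cur: (counts[cur] - counts[prev]) / counts[prev]
        for prev, cur in zip(months, months[1:])
    }


helped = customers_helped(completed)
change = month_over_month_change(helped)

for month, delta in change.items():
    print(f"{month}: {helped[month]} customers helped ({delta:+.0%})")
```

A run of positive changes like the +10% here is the cue to look at recent product changes or onboarding.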

Number of replies to resolution

A support conversation that turns into a huge amount of back and forth between the agent and customer is one of the biggest frustrations for customers. This is partly due to the missing context in most helpdesk software: agents struggle to avoid asking customers to complete steps they have already tried.

If you’re not using a modern support tool, aim for first contact resolution and next issue avoidance in every reply. Sure, your average handle time or time tracking is going to take a hit, but does that matter if you’ve invested time in helping a customer get everything they need in your reply?

Having this clarity with customers results in a big win for your support department.

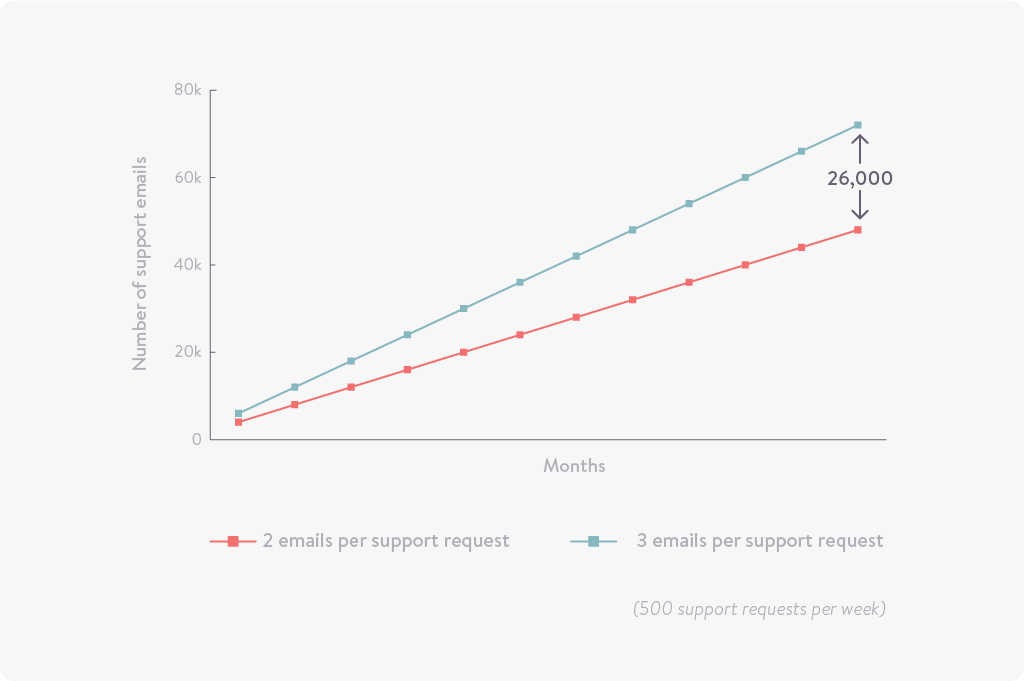

If you receive 500 support requests each week, and you reduce your number of replies by one in every interaction, that’s 26,000 fewer emails sent in a year.
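The arithmetic behind that figure, plus one way to track the metric itself, can be sketched in a few lines of Python. The reply counts and the helper name `replies_saved_per_year` are made up for illustration.

```python
from statistics import mean

WEEKS_PER_YEAR = 52


def replies_saved_per_year(requests_per_week: int, replies_saved_each: int = 1) -> int:
    """Replies no longer sent in a year if every conversation needs fewer replies."""
    return requests_per_week * replies_saved_each * WEEKS_PER_YEAR


# Hypothetical reply counts for five resolved conversations.
replies_per_conversation = [1, 2, 2, 4, 6]
average_replies = mean(replies_per_conversation)

print(f"Average replies to resolution: {average_replies}")
print(f"Replies saved per year: {replies_saved_per_year(500)}")  # 500 * 1 * 52 = 26000
```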

Number of team changes

Customers become deeply frustrated by lots of transfers between support agents. Yet, 59% of customers are transferred during a customer service interaction.

Transfers contribute to a high-effort experience for the customer as they re-explain their problem to every new support agent.

The support agent might not have knowledge in that area and may think they’re passing the customer on to an expert, but the customer sees it as you trying to pass off their problem. To counter this, assign conversations to teams, not individual agents.
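If your helpdesk exposes an assignment history, team changes can be counted per conversation. This is only a sketch: the `history` structure and team names are assumptions, not a real export format.

```python
# Hypothetical assignment history: conversation ID -> teams it was assigned to, in order.
history = {
    "c1": ["support"],
    "c2": ["support", "billing"],
    "c3": ["support", "billing", "engineering"],
    "c4": ["billing"],
}


def team_changes(teams):
    """Count how many times a conversation moved from one team to a different one."""
    return sum(1 for prev, cur in zip(teams, teams[1:]) if prev != cur)


changes = {cid: team_changes(teams) for cid, teams in history.items()}

# Share of conversations that were transferred at least once.
transferred_share = sum(1 for n in changes.values() if n > 0) / len(changes)

print(changes)
print(f"{transferred_share:.0%} of conversations were transferred")
```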

Customer satisfaction

Customer satisfaction keeps tabs on the support the customer received. Consider it a post-mortem metric that measures how the conversation went.

Measure customer satisfaction at natural pause points after interactions. This reveals trends that result from business changes and improvements.

We recommend sending customer satisfaction surveys 24 hours after an email conversation has been resolved – but immediately after live chat conversations.
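That timing rule can be written down as a small lookup. This is a sketch, reading the recommendation as 24 hours for email conversations and no delay for live chat. `SURVEY_DELAY` and `survey_send_time` are hypothetical names, and other channels are deliberately left unconfigured.

```python
from datetime import datetime, timedelta

# Delay before sending a satisfaction survey, per channel.
SURVEY_DELAY = {
    "email": timedelta(hours=24),
    "chat": timedelta(0),
}


def survey_send_time(channel: str, resolved_at: datetime) -> datetime:
    """Return when the survey should go out for a resolved conversation."""
    if channel not in SURVEY_DELAY:
        raise ValueError(f"no survey delay configured for channel {channel!r}")
    return resolved_at + SURVEY_DELAY[channel]


resolved = datetime(2024, 3, 1, 9, 0)
print(survey_send_time("email", resolved))  # one day after resolution
print(survey_send_time("chat", resolved))   # at resolution time
```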

Map the customer experience to achieve success

The 5 metrics above are at the core of keeping a pulse on a successful customer experience.

But to understand your current success and where and how to improve, you need to map your customer experience.

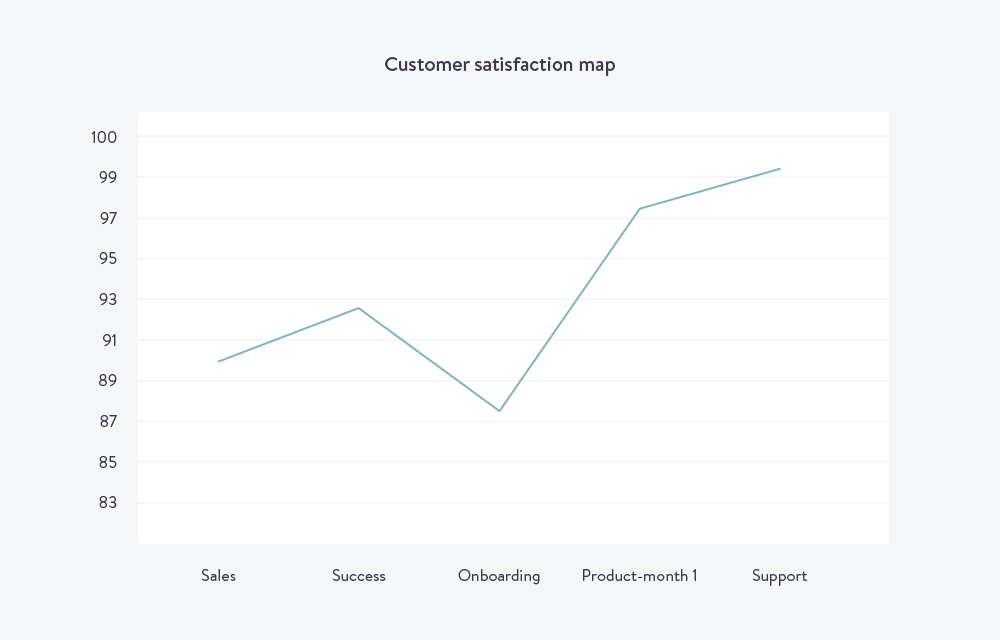

This is where your customer satisfaction score can provide vital insight into the experience you’re providing customers. Through transactional data you’ll be able to map satisfaction across:

- Your product or service

- Each channel where you offer support

Understand the experience with your product and service

Analyze your customer satisfaction survey and map out the parts of your product causing the most dissatisfaction among customers.

Pulling out your satisfaction scores and segmenting them into groups for analysis will help you visualize what your customers are experiencing. You will be able to identify tactics in the areas that are working or the ones that need improvement.

You can then start to analyze which teams, channels, product areas, and types of interactions are more successful than others. Identify elements that can be ‘borrowed’ for quick wins, and see where further investment is required.

As in the graph above, we might want to investigate how to improve the consistency of the post-purchase journey through sales, success, and onboarding.

We might use a Customer Success Manager to better link the sales and support process by:

- Understanding customer needs to help them get set up on your software

- Better success and support team handovers

- Customizing workflows and setting them up with a good account manager

How to use the data to improve your own services

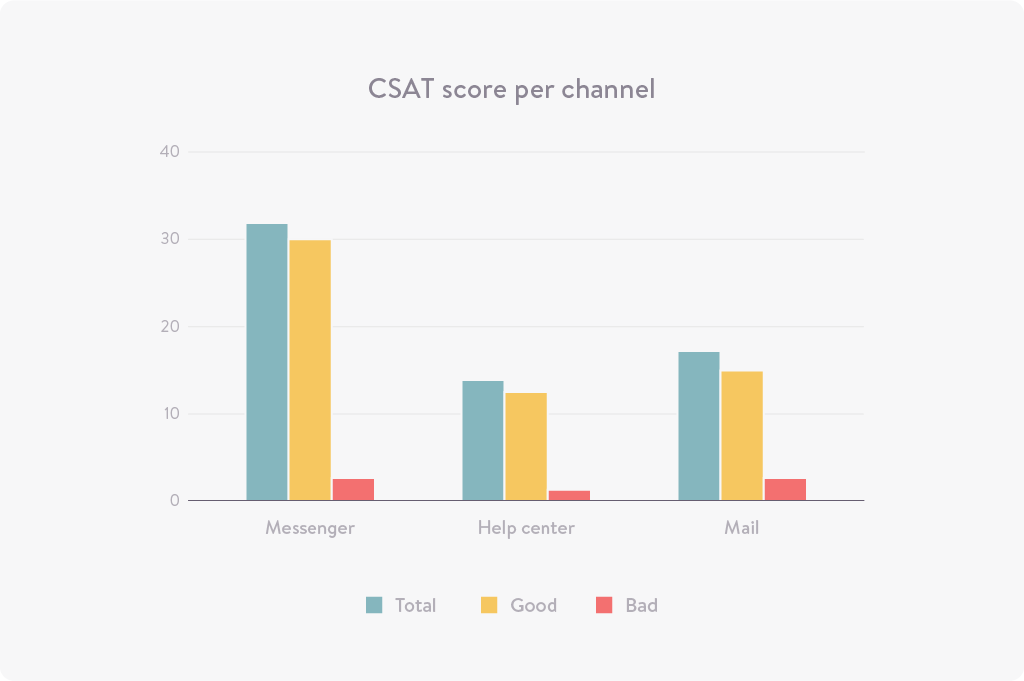

Mapping CSAT score per channel allows you to see where you’re not keeping a good level of service.

Ideally, run this report bi-weekly and report the success and proposed improvements company-wide.

The report analyzes key trends in the satisfaction ratings you receive, so you can better understand them and shift your focus toward improving customer experience. It also reflects on common trends in negatively rated conversations.

Your aim is to improve your ratings across each channel you support.

Then you can drill down into a qualitative analysis to determine the issue and how you can improve. Do this by capturing 5 random samples of positive and negative feedback in a table:
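A per-channel CSAT report can be sketched as below. The ratings are invented, and the sketch assumes CSAT is the share of surveys rated "good" on a simple good/bad scale. If your survey uses a different scale, the calculation will differ.

```python
# Hypothetical survey results: (channel, rating), where rating is "good" or "bad".
ratings = [
    ("email", "good"), ("email", "good"), ("email", "bad"), ("email", "good"),
    ("chat", "good"), ("chat", "bad"), ("chat", "bad"), ("chat", "good"),
    ("social", "bad"), ("social", "good"),
]


def csat_by_channel(rows):
    """Share of 'good' ratings per channel (assumed CSAT definition)."""
    totals, good = {}, {}
    for channel, rating in rows:
        totals[channel] = totals.get(channel, 0) + 1
        good[channel] = good.get(channel, 0) + (rating == "good")
    return {channel: good[channel] / totals[channel] for channel in totals}


scores = csat_by_channel(ratings)

# Channels ordered from lowest to highest score, so the weakest come first.
weakest_first = sorted(scores, key=scores.get)

for channel in weakest_first:
    print(f"{channel}: {scores[channel]:.0%}")
```

Running the same calculation on each bi-weekly batch of ratings gives you the trend per channel.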

Random samples of conversations receiving negative customer experiences:

| Conversation ID | Survey Comment (if any) | Observations | Proposed improvement |
| --- | --- | --- | --- |
| 2221412 | Issue is not resolved | The user was getting an error in the email parser, so emails were not coming through. | Nothing needed to be done in this case because a duplicate email had created a new conversation. Two emails were parsed at the same time and one of them ended up creating a new conversation, so we closed this case with a reference to the original case. The survey was sent to the client because we added the merged-case tag instead of the “merge” tag. |

Random samples of conversations receiving positive customer experiences:

| Conversation ID | Survey Comment (if any) | Customer’s requirement | Observations |
| --- | --- | --- | --- |
| 2219306 | | The user wanted to track the changes made to user organizations by his staff members. | – The solution, while not being obvious at first glance, was offered promptly and made the customer happy. – The secondary issue with the log being too short was solved in the same timely manner. – The general tone of the conversation was friendly and helpful. – The customer was given visual diagrams and that’s what they needed. That usually makes the customer experience easier and more pleasant. – The whole case took less than 2 hours, which considering the workload, is awesome. |

Doing this allows you to see where you can make improvements.

Make your customer experience the best in class

Average handle time and first response time give a useful overall impression of your support, but they don’t go far enough to highlight the quality or effort needed in giving personal or proactive support.

Even today, support departments are trying to shake the notion that they’re a cost center to the business. While speed is often seen as the be-all and end-all in support, it doesn’t always translate to satisfaction or even loyalty. But how do you even know? That’s where tracking your customers’ experience can be a source of new and untold rewards.